
As artificial intelligence creeps into our smartphone experience, SoC vendors have been racing to improve neural network and machine learning performance in their chips. Everyone has a different take on how to power these emerging use cases, but the general trend has been to include some sort of dedicated hardware to accelerate common machine learning tasks like image recognition. However, these hardware differences mean that chips offer varying levels of performance.

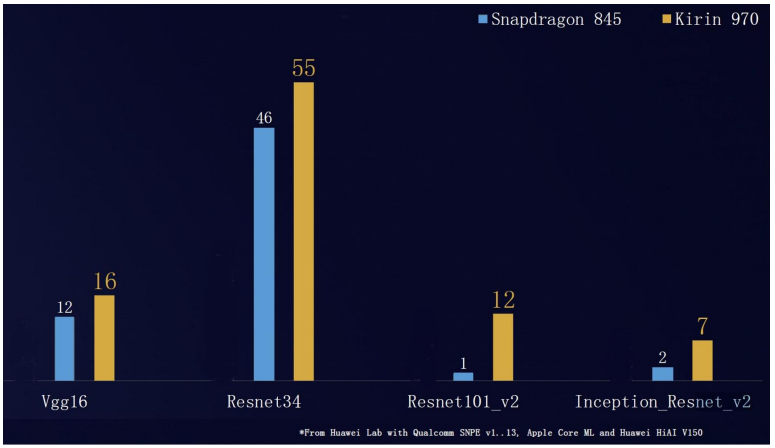

Last year it emerged that HiSilicon's Kirin 970 bested Qualcomm's Snapdragon 835 in a number of image recognition benchmarks. Honor recently published its own tests claiming the chip also outperforms the newer Snapdragon 845.

We're a little skeptical of the results when a company tests its own chips, but the benchmarks Honor used (ResNet and VGG) are widely used, pre-trained image recognition neural networks, so a performance advantage isn't to be sniffed at. The company claims up to a twelve-fold boost using its HiAI SDK versus Qualcomm's Snapdragon NPE. Two of the more popular results show a boost of between 20 and 33 percent.
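The quoted figures sit on very different scales. A minimal sketch, assuming each "percent boost" refers to throughput (images processed per second), converts them into speedup factors and into the share of per-image time saved. The function names are hypothetical, and no benchmark data is reproduced here.

```python
def speedup_from_boost(percent_boost: float) -> float:
    """Turn an 'X percent boost' in throughput into a speedup factor."""
    return 1.0 + percent_boost / 100.0


def time_saved_fraction(speedup: float) -> float:
    """Share of per-image processing time saved at a given speedup."""
    return 1.0 - 1.0 / speedup


if __name__ == "__main__":
    for boost in (20, 33):
        s = speedup_from_boost(boost)
        print(f"{boost}% boost: {s:.2f}x throughput, "
              f"{time_saved_fraction(s):.1%} less time per image")

    # A twelve-fold boost is a 12x speedup, i.e. an 1,100 percent increase.
    print(f"12x: {(12 - 1) * 100}% boost, "
          f"{time_saved_fraction(12.0):.1%} less time per image")
```

Under that assumption, the 20 to 33 percent results correspond to roughly 17 to 25 percent less time per image, while a twelve-fold gain would cut it by about 92 percent.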

Regardless of the exact results, this raises a rather interesting question about the nature of neural network processing on smartphone SoCs. What causes the performance difference between two chips aimed at similar machine learning applications?

DSP vs NPU approaches

The big difference between the Kirin 970 and the Snapdragon 845 is that HiSilicon's chip implements a Neural Processing Unit (NPU) designed specifically for quickly processing certain machine learning tasks. Qualcomm, meanwhile, repurposed its existing Hexagon DSP design to crunch numbers for machine learning, rather than adding extra silicon dedicated to these tasks.

With the Snapdragon 845, Qualcomm boasts up to triple the performance of the 835 for some AI tasks. To accelerate machine learning on its DSP, Qualcomm uses its Hexagon Vector Extensions (HVX), which speed up the 8-bit vector math common in machine learning workloads. The 845 also boasts a new micro-architecture that doubles 8-bit performance over the previous generation. Qualcomm's Hexagon DSP is an efficient math-crunching machine, but it's still fundamentally designed to handle a wide range of math tasks and has been gradually tweaked to boost image recognition use cases.
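To make "8-bit vector math" concrete: machine learning frameworks commonly store weights and activations as 8-bit integers plus a scale factor, multiply the small integers, and accumulate the products in a wider register. The plain-Python sketch below assumes simple symmetric per-tensor quantization with hand-picked scales. It is not Qualcomm's HVX instruction set or the Snapdragon NPE API, only the kind of arithmetic such vector units run across many lanes at once.

```python
def quantize_int8(values, scale):
    """Symmetric linear quantization: each real value maps to an int8 code
    such that value ~= scale * code."""
    codes = []
    for v in values:
        q = round(v / scale)
        codes.append(max(-128, min(127, q)))  # clamp to the signed 8-bit range
    return codes


def int8_dot(a_codes, b_codes):
    """Multiply 8-bit codes pairwise and sum them in a wider accumulator,
    as vector units typically do so the running total cannot overflow."""
    acc = 0
    for x, y in zip(a_codes, b_codes):
        acc += x * y
    # Python ints never overflow; this check mirrors a 32-bit hardware accumulator.
    assert -2**31 <= acc < 2**31
    return acc


def quantized_dot(a, b, scale_a, scale_b):
    """Approximate a float dot product using only 8-bit multiplies."""
    acc = int8_dot(quantize_int8(a, scale_a), quantize_int8(b, scale_b))
    return acc * scale_a * scale_b  # rescale the integer result to real units


weights = [0.5, -0.25, 0.75, 1.0]
activations = [1.0, 2.0, -1.0, 0.5]
exact = sum(w * x for w, x in zip(weights, activations))
approx = quantized_dot(weights, activations, 1.0 / 127, 2.0 / 127)
print(f"float: {exact:.4f}  int8: {approx:.4f}")
```

The int8 result lands close to the float one while every multiply uses 8-bit operands, which is the trade-off that makes 8-bit paths attractive on mobile hardware.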

The Kirin 970 also includes a DSP (a Cadence Tensilica Vision P6) for audio, camera image, and other processing. It's in roughly the same league as Qualcomm's Hexagon DSP, but it is not currently exposed through the HiAI SDK for use with third-party machine learning applications.

The Hexagon 680 DSP from the Snapdragon 835 is a multi-threaded scalar math processor, a different take from the dedicated matrix multiply processors used by Google and Huawei.

HiSilicon's NPU is highly optimized for machine learning and image recognition, but it is poorly suited to regular DSP tasks like audio EQ filters. The NPU is a bespoke design, developed in collaboration with Cambricon Technologies and built primarily around multiple matrix multiply units.
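Matrix multiply units help with image recognition because the convolutions at the heart of networks like ResNet and VGG can be rewritten as one large matrix multiplication. The sketch below uses the common "im2col" lowering, with simplifying assumptions (single input channel, stride 1, no padding). It illustrates the general technique and is not a description of how HiSilicon's NPU or the HiAI SDK actually schedules work.

```python
def im2col(image, k):
    """Unroll every k x k patch of a 2-D image (stride 1, no padding) into one row."""
    h, w = len(image), len(image[0])
    return [[image[i + di][j + dj] for di in range(k) for dj in range(k)]
            for i in range(h - k + 1) for j in range(w - k + 1)]


def matmul(a, b):
    """Naive matrix multiply: the operation a matrix multiply unit accelerates."""
    inner, cols = len(b), len(b[0])
    return [[sum(row[t] * b[t][j] for t in range(inner)) for j in range(cols)]
            for row in a]


def conv2d_via_matmul(image, kernels, k):
    """Apply several k x k kernels (cross-correlation, as in CNN layers) with one matmul."""
    patches = im2col(image, k)                      # (num_positions, k*k)
    weights = [[kern[di][dj] for kern in kernels]   # (k*k, num_kernels)
               for di in range(k) for dj in range(k)]
    return matmul(patches, weights)                 # (num_positions, num_kernels)


def conv2d_direct(image, kern, k):
    """Reference sliding-window version for one kernel, flattened row by row."""
    h, w = len(image), len(image[0])
    return [sum(image[i + di][j + dj] * kern[di][dj]
                for di in range(k) for dj in range(k))
            for i in range(h - k + 1) for j in range(w - k + 1)]


image = [[r * 4 + c for c in range(4)] for r in range(4)]   # 4 x 4 test image
edge = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]                 # vertical-edge kernel
blur = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]                    # box blur (unnormalized)
out = conv2d_via_matmul(image, [edge, blur], 3)
print(out)  # [[-6, 45], [-6, 54], [-6, 81], [-6, 90]]
```

Each column of the output matches a separate sliding-window convolution, so hardware that only knows how to multiply matrices can still run a whole convolutional layer.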

Building around matrix multiply units is the same approach Google took with its hugely powerful Cloud TPUs and its Pixel Visual Core chips. Huawei's NPU isn't as large or powerful as Google's server chips, opting for a small number of 3 x 3 matrix multiply units rather than Google's large 128 x 128 design. Google also optimized for 8-bit math, while Huawei focused on 16-bit floating point.
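The gap between a 3 x 3 and a 128 x 128 multiply unit is easiest to see by counting blocks. The sketch below is a textbook blocked (tiled) matrix multiply in which each innermost block stands in for one step of a hypothetical tile x tile hardware unit. It ignores how many units run in parallel, clock speed, pipelining, and memory bandwidth, so the counts are illustrative rather than a performance model of either chip.

```python
import math


def tiled_matmul(a, b, tile):
    """Blocked matrix multiply. Each (i0, j0, k0) block is the work a
    tile x tile multiply unit would do in one step. Returns (result, tile_ops)."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0] * p for _ in range(n)]
    tile_ops = 0
    for i0 in range(0, n, tile):
        for j0 in range(0, p, tile):
            for k0 in range(0, m, tile):
                tile_ops += 1  # one block-sized multiply-accumulate step
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, p)):
                        acc = c[i][j]
                        for k in range(k0, min(k0 + tile, m)):
                            acc += a[i][k] * b[k][j]
                        c[i][j] = acc
    return c, tile_ops


def tile_steps(n, tile):
    """Block steps needed for an n x n by n x n product on a tile x tile unit."""
    return math.ceil(n / tile) ** 3


result, ops = tiled_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]], tile=1)
print(result, ops)                               # [[19, 22], [43, 50]] 8
print(tile_steps(128, 3), tile_steps(128, 128))  # 79507 1
```

Under these simplified assumptions, one 128 x 128 product takes 79,507 block steps on a single 3 x 3 unit versus one step on a 128 x 128 array, which is why the number of small units running side by side matters so much for a design like Huawei's.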

The performance differences come down to architecture choices between more general DSPs and dedicated matrix multiply hardware.

The key takeaway here is that Huawei's NPU is designed for a very small set of tasks, mostly related to image recognition, but it can crunch through the numbers very quickly, allegedly up to 2,000 images per second. Qualcomm's approach is to support these math operations using a more conventional DSP, which is more flexible and saves on silicon space, but won't quite reach the same peak potential. Both companies are also big on the heterogeneous approach to efficient processing and have dedicated engines to manage tasks across the CPU, GPU, DSP, and in Huawei's case its NPU too, for maximum efficiency.
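The heterogeneous idea, sending each job to the most suitable engine and falling back when one is missing, can be sketched as a simple lookup. Everything here is hypothetical: the capability sets and engine groupings are illustrative assumptions, not HiAI or Snapdragon NPE behavior, and real schedulers also weigh power, latency, and data-transfer costs. The last helper converts the claimed 2,000 images per second into an average time per image.

```python
# Hypothetical capability table: which operation types each engine accelerates.
# These sets are illustrative assumptions, not vendor specifications.
CAPABILITIES = {
    "NPU": {"conv2d", "matmul"},
    "DSP": {"conv2d", "matmul", "fir_filter", "fft"},
    "GPU": {"conv2d", "matmul", "image_resize"},
    "CPU": None,  # None means "can run anything", just less efficiently
}

# Most specialized engine first, with the CPU as the universal fallback.
PREFERENCE = ["NPU", "DSP", "GPU", "CPU"]


def pick_engine(op, available):
    """Return the first available engine in PREFERENCE that supports `op`."""
    for engine in PREFERENCE:
        if engine not in available:
            continue
        supported = CAPABILITIES[engine]
        if supported is None or op in supported:
            return engine
    raise ValueError(f"no available engine can run {op!r}")


def avg_ms_per_image(images_per_second):
    """Average time per image implied by a throughput figure."""
    return 1000.0 / images_per_second


# Engines exposed to third-party apps (the Kirin's DSP is not exposed via HiAI).
kirin_like = {"NPU", "GPU", "CPU"}
# The DSP handles machine learning work on the Snapdragon side.
snapdragon_like = {"DSP", "GPU", "CPU"}

print(pick_engine("conv2d", kirin_like))       # NPU
print(pick_engine("fir_filter", kirin_like))   # CPU
print(pick_engine("conv2d", snapdragon_like))  # DSP
print(avg_ms_per_image(2000))                  # 0.5
```

The 0.5 ms figure is an average implied by throughput; if images are processed in batches, the latency of any single image can be higher.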

Qualcomm sits on the fence

So why is Qualcomm, a high-performance mobile application processor company, taking a different approach from HiSilicon, Google, and Apple for its machine learning hardware? The immediate answer is probably that there just isn't a meaningful difference between the approaches at this stage.

Sure, the benchmarks might reveal different capabilities, but the truth is there isn't a must-have application for machine learning in smartphones right now. Image recognition is moderately useful for organizing photo libraries, optimizing camera performance, and unlocking a phone with your face. If these can already be done fast enough on a DSP, CPU, or GPU, there seems to be little reason to spend extra money on dedicated silicon. LG is even doing real-time camera scene detection using a Snapdragon 835, which is very similar to what Huawei's camera AI software does using its NPU and DSP.
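Whether a DSP, CPU, or GPU is "fast enough" for something like real-time scene detection can be framed as a time budget: the inference, plus any other per-frame work, has to finish within one viewfinder frame. A minimal sketch, assuming a 30 fps preview; the 12 ms and 45 ms latencies are placeholders for illustration, not measurements of any chip.

```python
import math


def frame_budget_ms(fps):
    """Time available per frame at a given preview frame rate."""
    return 1000.0 / fps


def fits_realtime(inference_ms, fps, other_work_ms=0.0):
    """True if one inference plus other per-frame work fits inside a single frame."""
    return inference_ms + other_work_ms <= frame_budget_ms(fps)


def frames_between_updates(inference_ms, fps):
    """If inference runs back to back, how many frames pass per fresh result."""
    return max(1, math.ceil(inference_ms / frame_budget_ms(fps)))


# Placeholder latencies, not benchmark results.
for label, ms in [("hypothetical fast engine", 12.0), ("hypothetical slow engine", 45.0)]:
    print(label, fits_realtime(ms, 30), frames_between_updates(ms, 30))
```

Even the slower placeholder engine refreshes its scene label every second frame, which may well be acceptable for this kind of feature and helps explain why existing hardware can already cover it.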

Qualcomm's DSP is widely used by third parties, making it easier for them to start implementing machine learning on its platform.

In the future, we may see the need for more powerful or dedicated machine learning hardware to power more advanced features or save battery life, but at the moment the use cases are limited. Huawei might change its NPU design as the requirements of machine learning applications change, which could mean wasted resources and an awkward decision about whether to continue supporting outdated hardware. An NPU is also yet another bit of hardware third-party developers have to decide whether or not to support.

Qualcomm may well go down the dedicated neural network processor route in the future, but only if the use cases make the investment worthwhile. Arm's recently announced Project Trillium hardware is certainly a possible candidate if the company doesn't want to design a dedicated unit in-house from scratch, but we'll just have to wait and see.

Does it really matter?

When it comes to Kirin 970 vs Snapdragon 845, the Kirin's NPU might have an edge, but does it really matter that much?

There's no must-have use case for smartphone machine learning or "AI" yet. Even large percentage gains or losses in specific benchmarks aren't going to make or break the main user experience. All current machine learning tasks can be done on a DSP or even a regular CPU and GPU. An NPU is just a small cog in a much larger system. Dedicated hardware can give an advantage in battery life and performance, but it's going to be tough for consumers to notice a massive difference given their limited exposure to these applications.

As the machine learning marketplace evolves and more applications break through, smartphones with dedicated hardware will probably benefit; potentially they're a bit more future-proofed (unless the hardware requirements change). Industry-wide adoption appears inevitable, with MediaTek and Qualcomm both touting machine learning capabilities in lower-cost chips, but it's unlikely the speed of an onboard NPU or DSP will ever be the make-or-break factor in a smartphone purchase.

from Android Authority https://ift.tt/2rg6cbE

via IFTTT
